This article was last updated on: July 24, 2024 (evening)

Background

The edge cluster (Raspberry Pi + K3S) needs basic alerting.

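Edge cluster constraints

- CPU, memory, and storage are scarce. The cluster cannot host a full Prometheus-based monitoring stack, which needs at least 2 GB of memory plus substantial storage. Even a setup based on Prometheus Agent is too heavy. Extra storage and compute consumption must be avoided.

- The network cannot sustain a monitoring stack. Such stacks typically ship a sizeable amount of data on a fixed schedule (e.g. every 1 min) or continuously.

- Some sites are on metered 5G. Every destination address must be explicitly allowed, and traffic is billed by volume. 5G links also have limited, unstable bandwidth, and a site may go offline for a while.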

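Key requirements

In summary, the key requirements are:

- Timely alerting on edge cluster anomalies: we need to know what is going wrong in the edge cluster as it happens.

- Network: conditions are poor, so traffic must be minimal and only a handful of destination addresses can be allowed. Temporary instability (being offline for a while) must be tolerated.

- Resources: extra storage and compute consumption should be avoided as much as possible.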

Solution

Based on all of the above, the following solution was adopted:

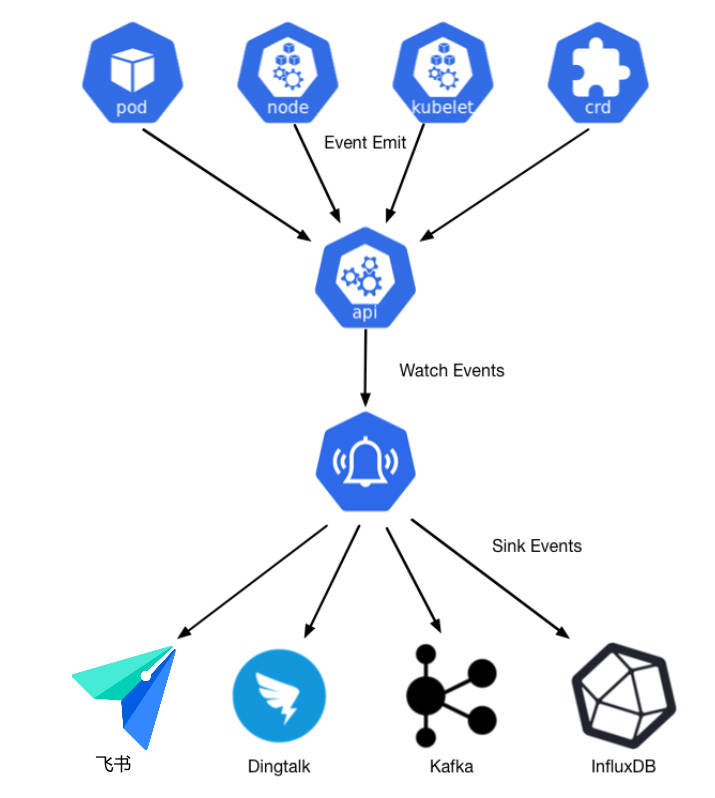

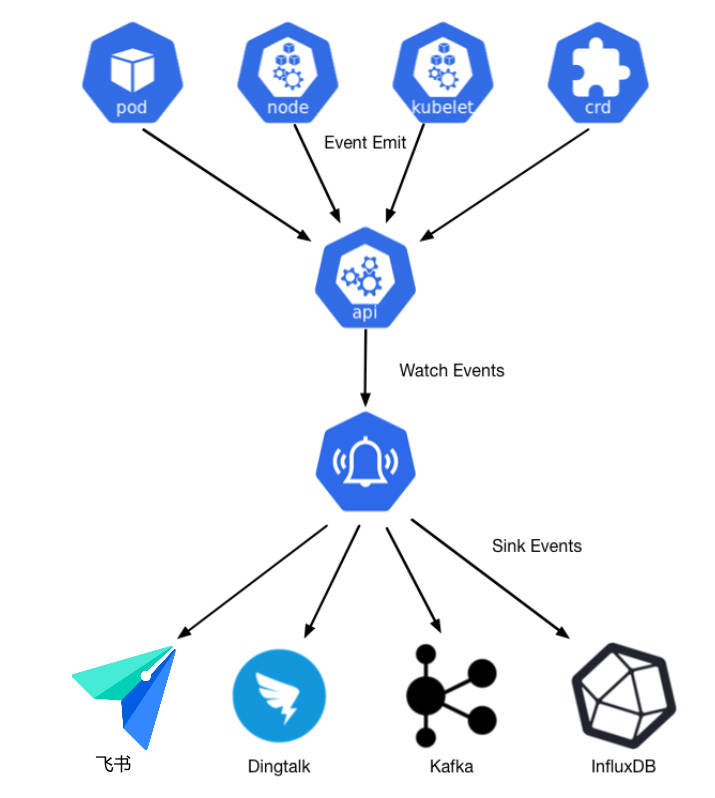

Alert notifications based on Kubernetes Events

Architecture diagram

Technical plan

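- Collect Events from various Kubernetes resources, for example:
  - pod
  - node
  - kubelet
  - crd
  - …
- Use the kubernetes-event-exporter component to collect the Kubernetes Events.
- Forward only `Warning`-level Events as alert notifications (the filter conditions can be refined later).
- Send alerts through communication tools such as a Feishu webhook (more channels can be added later).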
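The filtering rule in this plan can be modeled in a few lines. Below is a minimal sketch in Go, the language kubernetes-event-exporter itself is written in. It uses a hypothetical, trimmed-down `Event` type and a hypothetical `receiversFor` helper.

It mirrors the two routes in the step 2 config:
- Every event goes to the `dump` (stdout) receiver.
- Any event that is not `Normal` also goes to `feishu`.

A Kubernetes Event's `type` is either `Normal` or `Warning`, so dropping `Normal` amounts to keeping only `Warning`. This is a simplified model of the intended behaviour, not the exporter's actual routing code.

```go
package main

import "fmt"

// Event is a hypothetical stand-in for a Kubernetes Event that keeps
// only the fields relevant to the filtering rule.
type Event struct {
	Type    string // "Normal" or "Warning"
	Kind    string // kind of the involved object, e.g. "Pod"
	Reason  string
	Message string
}

// receiversFor models the two routes: every event reaches "dump",
// and only non-Normal (i.e. Warning) events also reach "feishu".
func receiversFor(e Event) []string {
	receivers := []string{"dump"}
	if e.Type != "Normal" {
		receivers = append(receivers, "feishu")
	}
	return receivers
}

func main() {
	events := []Event{
		{Type: "Normal", Kind: "Pod", Reason: "Pulled", Message: "Container image already present on machine"},
		{Type: "Warning", Kind: "Pod", Reason: "BackOff", Message: "Back-off restarting failed container"},
	}
	for _, e := range events {
		fmt.Printf("%s/%s -> %v\n", e.Type, e.Reason, receiversFor(e))
	}
}
```

Running it prints `Normal/Pulled -> [dump]` and `Warning/BackOff -> [dump feishu]`. Only the second event would become a Feishu alert.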

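Implementation steps

Manual approach:

On the edge cluster, perform the following steps:

1. Create the namespace, ServiceAccount, and RBAC roles as follows: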

```shell
cat << '_EOF_' | kubectl apply -f -
---
apiVersion: v1
kind: Namespace
metadata:
  name: monitoring
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: event-exporter-extra
rules:
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
      - list
      - watch
---
apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: monitoring
  name: event-exporter
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: event-exporter
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: view
subjects:
  - kind: ServiceAccount
    namespace: monitoring
    name: event-exporter
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: event-exporter-extra
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: event-exporter-extra
subjects:
  - kind: ServiceAccount
    # Must match the namespace of the ServiceAccount created above
    namespace: monitoring
    name: event-exporter
_EOF_
```

2. Create the kubernetes-event-exporter config as follows:

```shell
# Quoting '_EOF_' stops the shell from expanding $ or backticks inside the heredoc
cat << '_EOF_' | kubectl apply -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: event-exporter-cfg
  namespace: monitoring
data:
  config.yaml: |
    logLevel: error
    logFormat: json
    route:
      routes:
        # Route 1: every event is printed to the container's stdout
        - match:
            - receiver: "dump"
        # Route 2: drop Normal events and forward the rest (Warning) to Feishu
        - drop:
            - type: "Normal"
          match:
            - receiver: "feishu"
    receivers:
      - name: "dump"
        stdout: {}
      - name: "feishu"
        webhook:
          endpoint: "https://open.feishu.cn/open-apis/bot/v2/hook/..."
          headers:
            Content-Type: application/json
          layout:
            msg_type: interactive
            card:
              config:
                wide_screen_mode: true
                enable_forward: true
              header:
                title:
                  tag: plain_text
                  content: XXX IoT K3S Cluster Alert
                template: red
              elements:
                - tag: div
                  text:
                    tag: lark_md
                    content: "**EventType:** {{ .Type }}\n**EventKind:** {{ .InvolvedObject.Kind }}\n**EventReason:** {{ .Reason }}\n**EventTime:** {{ .LastTimestamp }}\n**EventMessage:** {{ .Message }}"
_EOF_
```

🐾 Note:

- `endpoint: "https://open.feishu.cn/open-apis/bot/v2/hook/..."`: replace with your own webhook endpoint. ❌ Never make it public!
- `content: XXX IoT K3S Cluster Alert`: change it to a name that is quick to recognize, e.g. "Home Test K3S Cluster Alert".

3. Create the Deployment as follows:

```shell
cat << '_EOF_' | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: event-exporter
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: event-exporter
      version: v1
  template:
    metadata:
      labels:
        app: event-exporter
        version: v1
    spec:
      volumes:
        - name: cfg
          configMap:
            name: event-exporter-cfg
            defaultMode: 420
        - name: localtime
          hostPath:
            path: /etc/localtime
            type: ''
        - name: zoneinfo
          hostPath:
            path: /usr/share/zoneinfo
            type: ''
      containers:
        - name: event-exporter
          image: ghcr.io/opsgenie/kubernetes-event-exporter:v0.11
          args:
            - '-conf=/data/config.yaml'
          env:
            - name: TZ
              value: Asia/Shanghai
          volumeMounts:
            - name: cfg
              mountPath: /data
            - name: localtime
              readOnly: true
              mountPath: /etc/localtime
            - name: zoneinfo
              readOnly: true
              mountPath: /usr/share/zoneinfo
          imagePullPolicy: IfNotPresent
      # serviceAccountName is the current field; serviceAccount is a deprecated alias
      serviceAccountName: event-exporter
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              preference:
                matchExpressions:
                  - key: node-role.kubernetes.io/controlplane
                    operator: In
                    values:
                      - 'true'
            - weight: 100
              preference:
                matchExpressions:
                  - key: node-role.kubernetes.io/control-plane
                    operator: In
                    values:
                      - 'true'
            - weight: 100
              preference:
                matchExpressions:
                  - key: node-role.kubernetes.io/master
                    operator: In
                    values:
                      - 'true'
      tolerations:
        - key: node-role.kubernetes.io/controlplane
          value: 'true'
          effect: NoSchedule
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoSchedule
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
_EOF_
```

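📝 Notes:

- The `event-exporter-cfg` settings mount the configuration file stored in the ConfigMap.
- The `localtime`, `zoneinfo`, and `TZ` settings switch the pod's time zone to `Asia/Shanghai`, so that times in the notifications are shown in CST.
- The `affinity` and `tolerations` settings make the pod prefer (but not require) a master node; adjust as needed. Here the master usually doubles as the edge cluster's gateway, has better hardware, and stays online the longest.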

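Automated deployment

Result: the component is deployed automatically when K3S is installed.

On the node running the K3S server, create `event-exporter.yaml` under `/var/lib/rancher/k3s/server/manifests/`. Create the directory first if it does not exist.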

```yaml
---
apiVersion: v1
kind: Namespace
metadata:
  name: monitoring
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: event-exporter-extra
rules:
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
      - list
      - watch
---
apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: monitoring
  name: event-exporter
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: event-exporter
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: view
subjects:
  - kind: ServiceAccount
    namespace: monitoring
    name: event-exporter
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: event-exporter-extra
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: event-exporter-extra
subjects:
  - kind: ServiceAccount
    # Must match the namespace of the ServiceAccount above
    namespace: monitoring
    name: event-exporter
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: event-exporter-cfg
  namespace: monitoring
data:
  config.yaml: |
    logLevel: error
    logFormat: json
    route:
      routes:
        # Route 1: every event is printed to the container's stdout
        - match:
            - receiver: "dump"
        # Route 2: drop Normal events and forward the rest (Warning) to Feishu
        - drop:
            - type: "Normal"
          match:
            - receiver: "feishu"
    receivers:
      - name: "dump"
        stdout: {}
      - name: "feishu"
        webhook:
          # Replace with your own webhook endpoint; never publish it
          endpoint: "https://open.feishu.cn/open-apis/bot/v2/hook/..."
          headers:
            Content-Type: application/json
          layout:
            msg_type: interactive
            card:
              config:
                wide_screen_mode: true
                enable_forward: true
              header:
                title:
                  tag: plain_text
                  content: xxx K3S Cluster Alert
                template: red
              elements:
                - tag: div
                  text:
                    tag: lark_md
                    content: "**EventType:** {{ .Type }}\n**EventKind:** {{ .InvolvedObject.Kind }}\n**EventReason:** {{ .Reason }}\n**EventTime:** {{ .LastTimestamp }}\n**EventMessage:** {{ .Message }}"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: event-exporter
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: event-exporter
      version: v1
  template:
    metadata:
      labels:
        app: event-exporter
        version: v1
    spec:
      volumes:
        - name: cfg
          configMap:
            name: event-exporter-cfg
            defaultMode: 420
        - name: localtime
          hostPath:
            path: /etc/localtime
            type: ''
        - name: zoneinfo
          hostPath:
            path: /usr/share/zoneinfo
            type: ''
      containers:
        - name: event-exporter
          image: ghcr.io/opsgenie/kubernetes-event-exporter:v0.11
          args:
            - '-conf=/data/config.yaml'
          env:
            - name: TZ
              value: Asia/Shanghai
          volumeMounts:
            - name: cfg
              mountPath: /data
            - name: localtime
              readOnly: true
              mountPath: /etc/localtime
            - name: zoneinfo
              readOnly: true
              mountPath: /usr/share/zoneinfo
          imagePullPolicy: IfNotPresent
      # serviceAccountName is the current field; serviceAccount is a deprecated alias
      serviceAccountName: event-exporter
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              preference:
                matchExpressions:
                  - key: node-role.kubernetes.io/controlplane
                    operator: In
                    values:
                      - 'true'
            - weight: 100
              preference:
                matchExpressions:
                  - key: node-role.kubernetes.io/control-plane
                    operator: In
                    values:
                      - 'true'
            - weight: 100
              preference:
                matchExpressions:
                  - key: node-role.kubernetes.io/master
                    operator: In
                    values:
                      - 'true'
      tolerations:
        - key: node-role.kubernetes.io/controlplane
          value: 'true'
          effect: NoSchedule
        - key: node-role.kubernetes.io/control-plane
          operator: Exists
          effect: NoSchedule
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
```

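K3S then deploys it automatically when it starts. It also re-applies files in this directory when they change on disk.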

📚️ Reference:

Auto-Deploying Manifests and Helm Charts | Rancher docs

Final result

As shown in the figure below:
